HTERM

Purpose

HTERM is a "dumb terminal" program which implements hardware flow control and rudimentary handling of UTF-8 encoding and ANSI escapes.

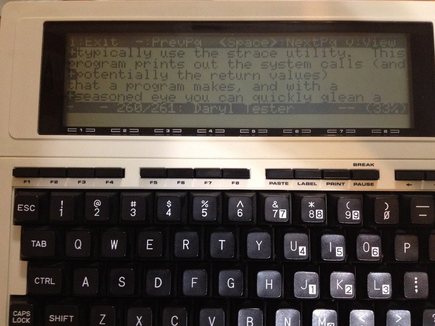

Screen Shots: HTERM to Linux

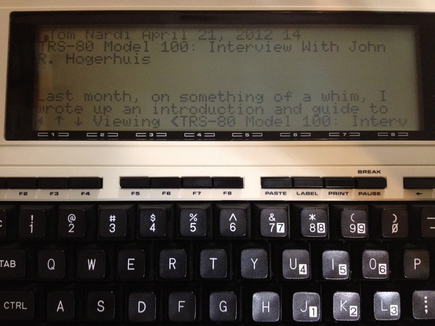

Powerbase Interview via W3M

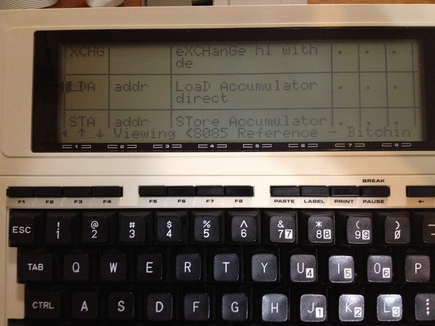

HTML Table from Bitchin100 wiki via W3M

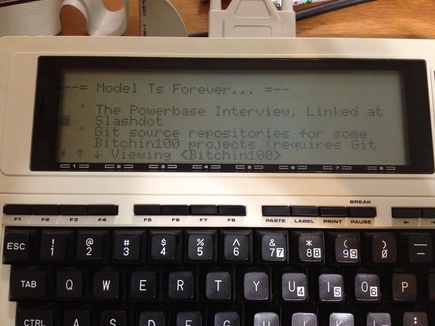

Bitchin100.com Browsed via W3M

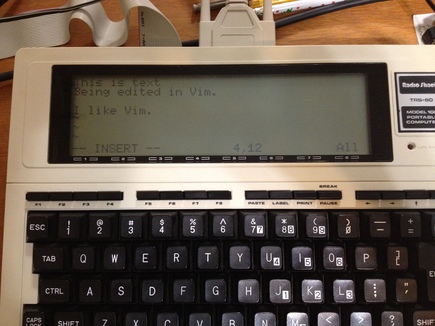

Editing a File with Vim

Reading Mailing List with Mutt

Source Code

Git repo: http://bitchin100.com/pub-git/hterm.git/

Latest Build

(No released build with Unicode support yet)

M100/T102

http://bitchin100.com/files/m10x/HTERM.CO

http://bitchin100.com/files/m10x/HTERM.lst

T200

http://bitchin100.com/files/t200/HTERM.CO

http://bitchin100.com/files/t200/HTERM.lst

Loading HTERM

The process for loading HTERM is the same as for a typical .CO file.

Unicode Support

UTF-8 is a variable-length encoding of Unicode. Each encoded character occupies between 8 and 32 bits (one to four bytes) under the current standard; the original design, shown in the table below, allowed up to six bytes. It adopts the 7-bit ASCII characters unchanged and extends from there.

HTERM supports UTF-8, since these days it is the default character encoding for Linux and the web. Several command line programs of interest, like w3m and mutt, generate UTF-8 encoded characters. w3m is a text mode web browser. When it renders HTML tables, it uses the Unicode line drawing glyphs, the character pi, the copyright symbol, etc. Mutt is an email client. When it represents threads, it uses the line drawing characters to draw something like a tree of emails.

In UTF-8, ASCII characters ($00-$7F) are the same as ever. Higher characters are represented as strings of two to six bytes in the following format:

The first byte in a UTF-8 encoded character has a run of 1's in the highest bits. The length of the run indicates the number of octets comprising the encoded character. This run of 1's is always followed by a zero. The rest of the bits in the first octet are "payload" of the encoding, that is, the most significant bits of the Unicode value being encoded.

Following octets start with 10, followed by the payload. Octet order of the payload bits, if prefix bits of each octet are discarded, is in MSB->LSB order.

(From Wikipedia)

| Bits | Last code point | Byte 1 | Byte 2 | Byte 3 | Byte 4 | Byte 5 | Byte 6 |
|---|---|---|---|---|---|---|---|
| 7 | U+007F | 0xxxxxxx | | | | | |
| 11 | U+07FF | 110xxxxx | 10xxxxxx | | | | |
| 16 | U+FFFF | 1110xxxx | 10xxxxxx | 10xxxxxx | | | |
| 21 | U+1FFFFF | 11110xxx | 10xxxxxx | 10xxxxxx | 10xxxxxx | | |
| 26 | U+3FFFFFF | 111110xx | 10xxxxxx | 10xxxxxx | 10xxxxxx | 10xxxxxx | |
| 31 | U+7FFFFFFF | 1111110x | 10xxxxxx | 10xxxxxx | 10xxxxxx | 10xxxxxx | 10xxxxxx |
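The run-length rule in the table can be sketched in a few lines of Python. This is only an illustration of the encoding rule, not HTERM's actual code; the function name `utf8_sequence_length` is made up for this example.

```python
def utf8_sequence_length(lead):
    """Return how many octets a UTF-8 lead byte announces.

    Returns 1 for plain ASCII, and 0 for a byte that cannot start a
    character: a continuation byte (10xxxxxx) or 0xFE/0xFF.
    """
    if lead & 0x80 == 0:
        return 1  # 0xxxxxxx: ASCII, passes through as-is
    run = 0
    mask = 0x80
    while mask and (lead & mask):
        run += 1  # count the leading 1 bits
        mask >>= 1
    # A run of exactly one 1 bit marks a continuation byte; runs of
    # seven or eight do not appear in the table above.
    return run if 2 <= run <= 6 else 0
```

For example, `utf8_sequence_length(0xE2)` gives 3, because `0xE2` is `11100010` in binary.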

Anyway, while mapping M100 characters to Unicode, I found that almost all of them can be represented with Unicode characters at or below $FFFF.

So I made a mapping, which so far covers about 150 characters. Note that this is larger than 128. This is because multiple Unicode characters map to some M100 characters. For example, both left-double-quote and right-double-quote map to the same Model 100 character.

Outbound, going from M100->Unicode is fast and easy. Everything 127 and below passes as-is. For the high numbered characters you just need a 128 character table. Subtract 128 from the character, and look up a 16-bit Unicode value. Then, encode as UTF-8 and send to the peer. Alternatively, one could store each character in the table already encoded as UTF-8, but this seemed wasteful.
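As a rough sketch of the outbound path (in Python for readability, not the code HTERM actually runs), the lookup and encode steps might look like this. The names `encode_utf8_16` and `m100_to_utf8`, and the `high_table` argument, are hypothetical; the real 128-entry table lives in the HTERM source.

```python
def encode_utf8_16(code_point):
    """Encode a Unicode value in the 16-bit range as 1 to 3 UTF-8 octets."""
    if not 0 <= code_point <= 0xFFFF:
        raise ValueError("only the 16-bit range is handled in this sketch")
    if code_point < 0x80:
        return bytes([code_point])                    # 0xxxxxxx
    if code_point < 0x800:
        return bytes([0xC0 | (code_point >> 6),       # 110xxxxx
                      0x80 | (code_point & 0x3F)])    # 10xxxxxx
    return bytes([0xE0 | (code_point >> 12),          # 1110xxxx
                  0x80 | ((code_point >> 6) & 0x3F),  # 10xxxxxx
                  0x80 | (code_point & 0x3F)])        # 10xxxxxx


def m100_to_utf8(ch, high_table):
    """Convert one M100 character code (0-255) to UTF-8 bytes.

    high_table is a hypothetical list of 128 Unicode values, one for
    each M100 character from 128 to 255.
    """
    if ch < 128:
        return bytes([ch])  # ASCII passes as-is
    return encode_utf8_16(high_table[ch - 128])
```

Storing 16-bit values keeps the table at two bytes per entry, whereas pre-encoded UTF-8 would need up to three.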

Inbound, detecting and decoding UTF-8 characters was a bit of work. But because I only supported the 16-bit range of Unicode, I only needed to handle 2 and 3 octet UTF-8 characters. So there are two decoding subroutines, selected by the detected run length.
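The two decoders can be illustrated in Python as follows. This is a simplified sketch, not a port of the HTERM routines: the function names and the `UNKNOWN` placeholder are invented here, and continuation bytes are not validated.

```python
UNKNOWN = 0xFFFD  # placeholder for anything this sketch cannot decode


def decode_two_octets(b0, b1):
    """110xxxxx 10xxxxxx -> 11-bit value."""
    return ((b0 & 0x1F) << 6) | (b1 & 0x3F)


def decode_three_octets(b0, b1, b2):
    """1110xxxx 10xxxxxx 10xxxxxx -> 16-bit value."""
    return ((b0 & 0x0F) << 12) | ((b1 & 0x3F) << 6) | (b2 & 0x3F)


def decode_16bit(data):
    """Decode bytes to a list of Unicode values, 16-bit range only."""
    out = []
    i = 0
    while i < len(data):
        b0 = data[i]
        if b0 < 0x80:                                    # ASCII
            out.append(b0)
            i += 1
        elif b0 & 0xE0 == 0xC0 and i + 1 < len(data):    # run of two 1s
            out.append(decode_two_octets(b0, data[i + 1]))
            i += 2
        elif b0 & 0xF0 == 0xE0 and i + 2 < len(data):    # run of three 1s
            out.append(decode_three_octets(b0, data[i + 1], data[i + 2]))
            i += 3
        else:                                            # longer runs, stray bytes
            out.append(UNKNOWN)
            i += 1
    return out
```

A real terminal receives bytes one at a time, so it would keep the partial character in state between calls rather than index into a buffer.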

Once it's decoded, you now have a 16-bit Unicode value. But what to do with it? The goal is, for each incoming Unicode character, to look up an approximate M100 equivalent and display it. If there is no match at all, just show a stand-in character (I used the checkerboard pattern).

But unlike the M100->Unicode case, you can't make a direct lookup table on the M100. A table indexed by the full 16-bit range would need 64K entries, which is twice the RAM in the M100.

An alternative is to sort the supported 16-bit Unicode character values, and then do a binary search for the incoming one. This takes about log-base-2 of n comparisons to find the character of interest, or to discover it is unknown to HTERM. I didn't like that idea either... I wanted constant time efficiency.

So I knew I could do a bucket hash or linear probing on an array with a simple hash function. But I figured it would be fun to do better... could I find a perfect hash function? That is, a function that sends every mapped 16-bit Unicode value to its own entry in my table, with no two values sharing an entry.

I found something called a Pearson's Hash. This relies on a 256-byte lookup table L. In my case, I look up the most significant and least significant byte of the Unicode value in the table, and XOR the two values I get together. This gives me an index into my Unicode->M100 table. If I get a character u, I calculate L[u/256] XOR L[u AND 255], or L[u>>8] ^ L[u&0xff]. The resulting value is the array index, calculated in constant time... no linear search or binary search, just a calculation.
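Here is a small Python sketch of that lookup. The hash itself follows the formula above; everything else is an assumption made for illustration: the names, the 256-slot layout, and the stored key per slot, which is one way to recognize an unmapped character that happens to hash to an occupied slot. The glyph codes in the usage note are made up.

```python
SLOTS = 256           # the XOR of two bytes is always in 0..255
UNKNOWN_GLYPH = 0xFF  # hypothetical stand-in for the checkerboard character


def hash_index(u, table):
    """L[u >> 8] ^ L[u & 0xff], where table is the 256-entry list L."""
    return table[u >> 8] ^ table[u & 0xFF]


def build_slots(mapping, table):
    """Place each Unicode -> M100 pair in its hashed slot.

    Raises ValueError if two keys land in the same slot, which means
    the table is not a perfect hash for this mapping.
    """
    slot_keys = [None] * SLOTS
    slot_glyphs = [UNKNOWN_GLYPH] * SLOTS
    for u, glyph in mapping.items():
        i = hash_index(u, table)
        if slot_keys[i] is not None:
            raise ValueError("collision at slot %d" % i)
        slot_keys[i] = u
        slot_glyphs[i] = glyph
    return slot_keys, slot_glyphs


def unicode_to_m100(u, table, slot_keys, slot_glyphs):
    """Constant-time lookup: one hash, one compare."""
    i = hash_index(u, table)
    if slot_keys[i] == u:
        return slot_glyphs[i]
    return UNKNOWN_GLYPH  # slot empty or owned by a different character
```

With the identity table `list(range(256))` and a mapping of `{0x03C0: 0x88, 0x2500: 0xF1}`, the value `0x2603` hashes to the same slot as `0x2500`, and the key comparison is what sends it to the stand-in glyph instead.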

The problem, of course, is coming up with the lookup table L. Nothing I could find said anything about how to make such a table. It was left, I guess, as "an exercise for the reader." I tried to do it by hand, but ended up with collisions (two Unicode values mapping to the same array index).

So I wrote a Perl script to brute force it. The script starts by creating an array of the values 0-255. Then it shuffles the array into a random order.

Next, it walks through many further reordered arrangements in an iterative fashion. I made an ad hoc "fitness function" and used something like Lamarckian evolution to evolve a useful arrangement / seek a nearby minimum. Each iteration the script changes the ordering and applies the fitness function to the result. If the change improves the ordering (decreases its score) then the new ordering survives into the next iteration. If it doesn't, the script reverts to the previous ordering for the next iteration.

The fitness function has various weights for different unwanted things. For example, a collision (two Unicode values mapping to the same spot) has a weight.
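The search loop can be sketched in Python as below. This is not the Perl script: it is a stripped-down stand-in whose only "unwanted thing" is a collision, so the score is just the collision count with no weights, and each step swaps two random entries. All names, the seed, and the iteration limit are choices made for this example.

```python
import random


def hash_index(u, table):
    """L[u >> 8] ^ L[u & 0xff], where table is the 256-entry list L."""
    return table[u >> 8] ^ table[u & 0xFF]


def collisions(keys, table):
    """Score: how many keys share a slot with an earlier key (lower is better)."""
    return len(keys) - len({hash_index(u, table) for u in keys})


def search_table(keys, seed=0, max_iters=200_000):
    """Return (table, score); a score of 0 means a perfect hash was found."""
    rng = random.Random(seed)
    table = list(range(256))
    rng.shuffle(table)                    # random starting order
    score = collisions(keys, table)
    for _ in range(max_iters):
        if score == 0:
            break
        a, b = rng.randrange(256), rng.randrange(256)
        table[a], table[b] = table[b], table[a]      # try a small change
        new_score = collisions(keys, table)
        if new_score < score:
            score = new_score                        # improvement survives
        else:
            table[a], table[b] = table[b], table[a]  # revert to previous order
    return table, score
```

Some key sets can never be made collision-free with this hash. For example, two values whose high byte equals their low byte, such as $0000 and $0101, both hash to 0 for every table.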

Anyway, to my surprise, once I got the Perl script debugged, and fiddled with the weights, it came up with usable lookup tables VERY quickly. There are probably some other ways of finding lookup tables, but so far the Perl script is working. Obviously you can't search through all orderings... that's 256 factorial (big). So you need to do some kind of search.